Can open source be too open?

Lessons from a decade of cloud infrastructure projects

Few topics in 2023 are more schismatic than open source LLMs. To some, they represent a noble crusade against closed source model providers. To others, they are the misguided equivalent of trying to publish nuclear weapon design specs on the internet. Either way, companies building open source models and LLM infrastructure such as Mistral and Together are amassing capital and talent.

What is intriguing is that, in parallel, many of the open source darlings of the last decade are reassessing their openness. Red Hat and Hashicorp opted to restrict their open source licenses this summer. Mongo, Elastic, Cockroach, Sentry, and MariaDB did the same a few years ago. Docker sunset its open source offering almost entirely. The Confluent and Databricks hosted clouds resemble open source Kafka and Spark less and less. Even in AI, the share of models with permissive open source licenses on Hugging Face is plummeting by some measures.

What’s going on? Have open source cloud infrastructure companies figured something out that open source LLM companies should pay attention to?

Yes, in short. The evolution of open source cloud infrastructure over the last decade suggests several lessons for open source LLM start-ups:

Don’t hang your product differentiation on open source: Customers want to buy delightful product experiences. Openness is rarely the most important factor in procurement decisions. Almost all category-defining open source companies would still be the preferred vendors in their space even if their software were proprietary, though open source can sometimes help support a long tail of features (e.g. Airbyte vs Fivetran) or enable a novel go-to-market (GTM) motion. That is why Mongo, Hashicorp, etc. were able to switch to more restrictive licenses with little discernible impact on their commercial prospects. The best open source LLM companies will likewise win because they solve an AI infrastructure problem better than everyone else, not because they publish their model weights.

Your business model should dictate your openness, not the other way around: While open source can be a powerful GTM tactic, it isn’t a substitute for a business model: on average, open source companies don’t enjoy better distribution than closed source ones. On the contrary, open source companies can find themselves in uncomfortable positions when the public availability of their code curtails monetization opportunities. Avoiding these sticky situations means orienting your open source strategy around how you plan to commercialize your product from the get-go.

Open source cloud infrastructure GTM strategies may not apply to open source LLM companies: Developer-led growth and monetizing through cloud hosting is the dominant commercialization playbook for open source cloud infrastructure projects. These tactics may need to be revised for open source LLMs, which don’t necessarily enjoy the same ease-of-adoption advantages.

I’ll walk through each of these by disproving an open source “myth”, showing how the lesson manifested in the cloud infrastructure era, and suggesting what open source LLMs might learn.

Don’t hang your product differentiation on open source

Myth: Open source is a magic switch for increasing developer distribution and accelerating product velocity.

A common argument for the virtues of open source businesses is that they boost distribution (since they are free to use and alleviate lock-in concerns) and product velocity (since they can draw on the engineering talents of their developer communities). But, looking across some of the biggest publicly traded cloud infrastructure companies, this doesn’t appear to be the case.

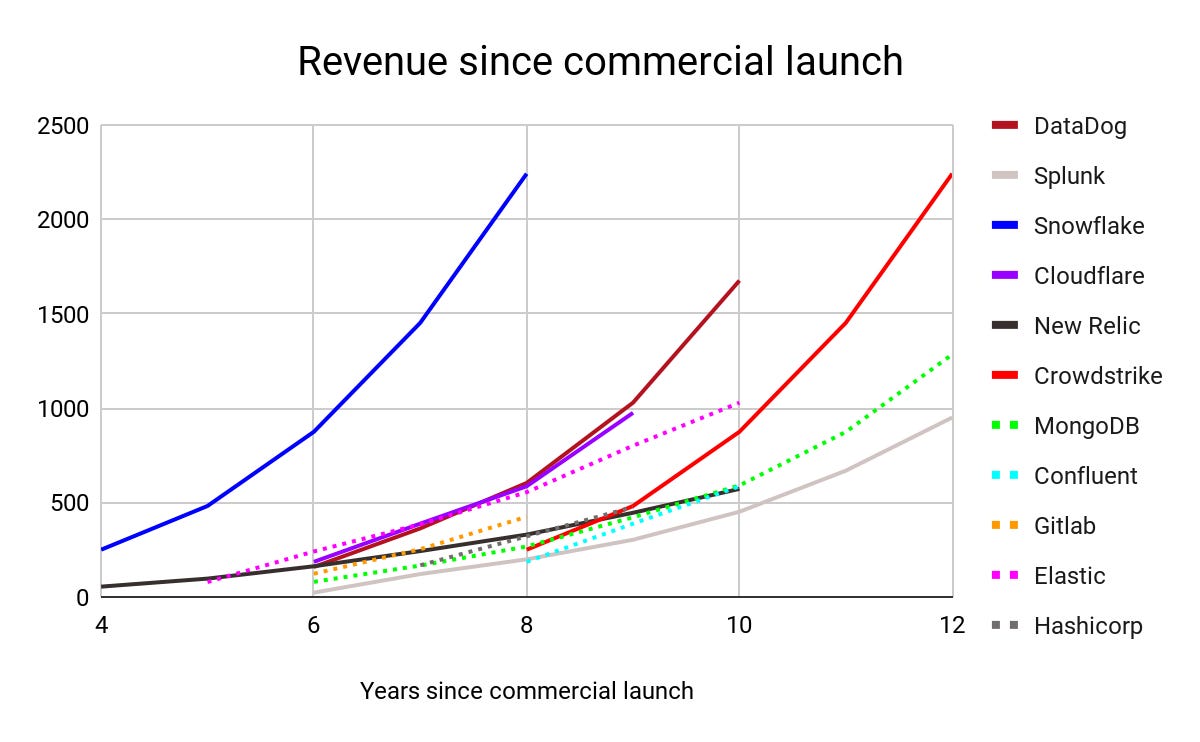

The median time for open source businesses to reach $500M in revenue (9.2 years) is similar to the median time for closed source businesses to reach that scale (9.4 years).

Likewise, the revenue ramps of the open source companies aren’t steeper than those of closed source.

And if open source projects ship faster, it doesn’t show up as stronger cross-sell capabilities. The net dollar retention (NDR) of public open source companies sits squarely between that of the most retentive infrastructure companies in our sample (Snowflake, Datadog) and the least (Cloudflare, Dynatrace, Splunk).

Lesson from cloud infrastructure: Enterprises rarely buy software because it is open source.

Open sourcing a project isn’t a substitute for a compelling product, because paying customers care far more about product capabilities than open source branding. Indeed, after years of struggling to monetize their open source projects, some companies are finding that going closed source actually helps their commercial prospects. They can more than offset the customers who churn in reaction to the project becoming closed source by better monetizing the customers they retain.

Docker is the poster child. After failing for years to turn its wildly popular open source project into a large business, Docker simply started charging users for its product. While doing so effectively ended Docker’s status as an open source project, growth popped: ARR jumped from $11M in 2020 to $135M in 2022.

One could argue that Docker could only move to value extraction mode because it had built a strong open source brand in the first place. But if jettisoning that brand didn’t matter much, we must conclude that product capabilities trumped open source proclivities when customers voted with their wallets. Depending on the product context, an open source GTM can be neutral or even counterproductive.

Learning for open source LLM companies: Building killer functionality for enterprises is more important for product differentiation than an open source license.

I doubt that the tie-breaking variable for buyers evaluating competing LLM infrastructure offerings will be whether one is open source. Product experience, security, auditability, and ease of use matter more.

Every scaled cloud infrastructure company is successful because it is an enterprise company. The majority of their revenues come from large customers. Infrastructure start-ups that don’t build with that eventuality in mind from the early days can trap themselves at the bottom of the market (e.g. RethinkDB, Cloud9). By contrast, breakout companies like Mongo and Databricks prioritized enterprise features relatively early in their development (for instance, Mongo introduced SSL in 2014).

Open source LLM companies will need to figure out what enterprises are willing to pay for — a data security layer? latency guarantees? — and bake that into their product DNA.

Your business model should dictate your openness, not the other way around

Myth: You can always back-solve for a good business from a popular open source project.

Over the last decade, there have been hundreds of open source projects that received tens of thousands of GitHub stars and similar numbers of downloads. A number of these received venture funding. Only a small handful became great companies. In fact, whether you cut the data by IPO or $1B+ acquisition, there have been far fewer major open source cloud infrastructure exits over the last decade than closed source ones. Open source companies can create fantastic enterprise value, but the underlying projects need to be tightly coupled with (and steered by) a sustainable business model.

Lesson from cloud infrastructure: Open source software companies can often best monetize with a “freemium” business model.

Open source projects that naturally push users into a “freemium” hosted cloud offering display the best business genetics: whet the appetites of users with a free tier and then convert them to revenue-generating customers with a more robust paid tier. That GTM approach necessitates a large capability gap between the free (open source) and premium (hosted) offerings in order to maximize user willingness to pay.

Databricks’ and Confluent’s recent product updates are illustrative. Both decided to largely swap out the underlying open source architectures that powered their hosted services and replace them with new, proprietary serverless engines. This makes sense. Confluent Cloud and Delta Lake are now true serverless platforms rather than managed hosting of open source code. The question for prospective customers is no longer “can I host Kafka / Spark myself?” but “can I build a serverless data streaming / lake solution myself?”, which is an even stronger reason to buy, not build. Closed source hosted offerings have the further virtue of not being forkable by AWS.

Learning for open source LLM companies: Open source models can be an on-ramp for closed source ones.

Analogous to Databricks’ and Confluent’s evolution towards a “freemium” paradigm, an effective monetization strategy for open source LLM companies may be to offer a less powerful open source “free tier” model as an on-ramp for a more powerful closed, hosted “premium” model. Indeed, one possibility for why Meta open sourced the Llama model family is that it is attempting to create a model standard ahead of upselling customers with a hosted, closed source solution (remember, Meta has one of the world’s largest GPU clusters). That would explain why Meta is keeping the best Code Llama model (“Unnatural Code Llama”) proprietary.

Open source cloud infrastructure GTM strategies may not apply to open source LLM companies

Myth: Open source enables a more efficient developer acquisition motion.

In reality, open source payback periods are a touch longer than closed source ones (29 versus 24 months) for public software infrastructure companies, while sales and marketing (S&M) spend as a share of revenue is higher for open source.

Note: First chart is courtesy of Redpoint.

Lesson from cloud infrastructure: To avoid being killed, open source software infrastructure companies must effectively counterposition against the public cloud providers.

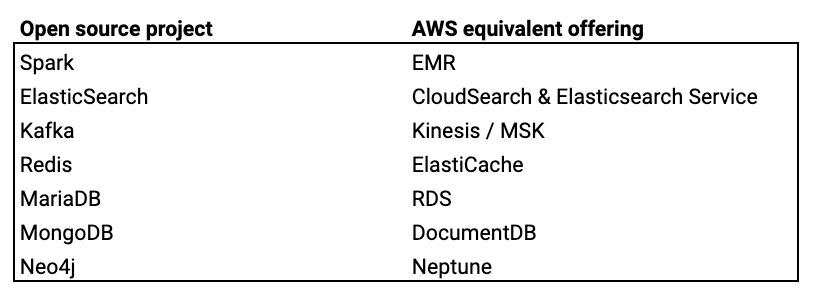

A key reason why open source customer acquisition cost (CAC) isn’t lower is that successful open source projects inevitably attract the attention of would-be competitors (most notably, the public clouds) looking to resell the freely available code as a hosted offering themselves. AWS offers a hosted SKU of nearly every popular open source infrastructure project.

To survive, open source cloud infrastructure companies had to learn how to stand out as the go-to cloud solution for their own projects. Multicloud and targeted marketing were pieces of that strategy. Rolling back overly permissive open source licenses was another. In 2018, Redis and MongoDB changed their open source licenses to be more restrictive. The new licenses (Commons Clause and SSPL, respectively) differ slightly in their implementations, but the upshot is the same: other service providers can’t sell products that commercialize the underlying source code. Elastic followed suit by adopting the SSPL in 2021.

Another popular class of restrictive license is the Business Source License (BSL), which limits commercial use of a project beyond some defined metric (e.g. number of servers run in production). MariaDB pioneered the trend in 2016. CockroachDB and Sentry did the same in 2019 and, most recently, Hashicorp adopted a BSL in 2023.

Though these changes ignited a furor among some developers, they helped ensure that the companies behind these projects remained the go-to destination for running them as a hosted service.

Learning for open source LLM companies: LLM infrastructure companies will also need to counterposition against the public cloud providers. Doing so may be harder.

It may be dangerous for open source LLM companies to overlearn the GTM lessons of their cloud infrastructure forebears. For cloud infrastructure projects, a developer-led GTM motion was enabled by open source’s relative ease of adoption compared to proprietary solutions: enterprise buyers often view open source as reducing lock-in, appreciate the ability to customize source code, and enjoy being able to get started without involving salespeople. These advantages may not translate to open source models:

Developer-led sales could get trickier due to security and compliance concerns. LLMs are only as good as the data they can access, which means that developers might find it difficult to try them off-the-shelf and evaluate their effectiveness without going through a full IT review.

Lock-in with closed source offerings might end up being less of a concern for buyers since swapping out a model API is easier than migrating to a new infrastructure software solution.

Early open source efforts (Llama, StarCoder) are doing an impressive job training reasonably performant smaller models. But the jury is out on whether self-hosting will be a practical option for models with advanced reasoning capabilities. Running distributed GPU workloads is a tricky problem. DIY could be more effort than it's worth.

Monetizing via cloud hosting might also be tougher for open source LLM companies. Much as users might choose to host an open source database on AWS instead of with the company that wrote the code for it, training a great model doesn’t guarantee that users will want to pay you for fine-tuning and inference. The public clouds and OpenAI are already investing billions to become the defaults for the latter. Earning the right to run LLM workloads won’t be easy.

Conclusion

Publishing source code is a business tactic, not a product identity. No breakout cloud infrastructure company defined its mission as being an open source version of a closed source competitor. It took a decade for giants like Mongo, Hashicorp, and Elastic to thread the needle between cultivating developer love and earning revenue from those users.

Unfortunately for open source LLM companies, I doubt they can fork this playbook. The DNA of LLMs is too different from that of databases. These start-ups should start thinking now about the new GTM tactics they’ll need, so they can avoid angering their users later with restrictive license “rug pulls”.

Outsourcing business strategy to open source is a losing game.

Thank you to James Alcorn, Alex Mackenzie, Greg McKeon, Anuj Mehndiratta, and Veeral Patel for their thoughts and feedback.